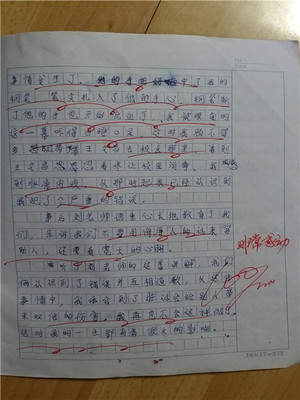

晚上无意中观赏了《A beautifulmind》这不电影,讲述了约翰.纳什这个高智商的孤独男人奋斗的一生,从心理学的角度看完了整部电影,可歌可泣的是,当白发苍苍的老人站在瑞典国的领奖台上的时候,他的视界里、感言中始终是台下容颜不再的妻子,这个用一生的青春、爱与命运博弈,成就纳什博弈均衡理论的智慧大爱的女人。

作为应用数学一个分支,孤独的天才数学家John Nash的gametheory被无数次引经据典的是他的非合作博弈,也就是Nash均衡:

以下Nashequilibrium转自wikipedia(http://en.wikipedia.org/wiki/Nash_equilibrium)

In game theory, the Nash equilibrium (namedafter John Forbes Nash) is a solution concept of a game involving two or more players, inwhich each player is assumed to know the equilibriumstrategies of the other players, and no player has anything togain by changing only his own strategy unilaterally.[1]:14If each player has chosen a strategy and no player can benefit bychanging his or her strategy while the other players keep theirsunchanged, then the current set of strategy choices and thecorresponding payoffs constitute a Nash equilibrium.

Stated simply, Amy and Phil are in Nash equilibrium if Amy ismaking the best decision she can, taking into account Phil'sdecision, and Phil is making the best decision he can, taking intoaccount Amy's decision. Likewise, a group of players are in Nashequilibrium if each one is making the best decision that he or shecan, taking into account the decisions of the others.

Applications:

Game theorists use the Nash equilibrium concept to analyze theoutcome of the strategic interaction of several decision makers. In other words, it providesa way of predicting what will happen if several people or severalinstitutions are making decisions at the same time, and if theoutcome depends on the decisions of the others. The simple insightunderlying John Nash's idea is that we cannot predict the result ofthe choices of multiple decision makers if we analyze thosedecisions in isolation. Instead, we must ask what each player woulddo, taking into account the decision-making of theothers.

Nash equilibrium has been used to analyze hostile situationslike warand arms races[2](see Prisoner's dilemma), and also howconflict may be mitigated by repeated interaction (see Tit-for-tat). It has also been used to study towhat extent people with different preferences can cooperate (seeBattle of the sexes),and whether they will take risks to achieve a cooperative outcome(see Stag hunt). It has been used to study the adoptionof technical standards[citationneeded], and also the occurrence of bankruns and currency crises (see Coordination game). Other applicationsinclude traffic flow (see Wardrop's principle), how to organizeauctions (see auction theory), the outcome of effortsexerted by multiple parties in the education process,[3]regulatory legislation such as environmental regulations (seeTragedy of theCommons),[4]and even penalty kicks in soccer(see Matching pennies).[5]

History:A version of the Nash equilibrium concept was first used byAntoine Augustin Cournot inhis theory of oligopoly (1838). In Cournot's theory, firms choosehow much output to produce to maximize their own profit. However,the best output for one firm depends on the outputs of others. ACournot equilibrium occurs when eachfirm's output maximizes its profits given the output of the otherfirms, which is a pure strategy Nash Equilibrium.

The modern game-theoretic concept of Nash Equilibrium is insteaddefined in terms of mixed strategies, where players choose aprobability distribution over possible actions. The concept of themixed strategy Nash Equilibrium was introduced by John von Neumann and Oskar Morgenstern in their 1944 book TheTheory of Games and Economic Behavior. However, their analysiswas restricted to the special case of zero-sum games. They showed that a mixed-strategyNash Equilibrium will exist for any zero-sum game with a finite setof actions. The contribution of John Forbes Nash in his 1951 articleNon-Cooperative Games was to define a mixed strategy NashEquilibrium for any game with a finite set of actions and provethat at least one (mixed strategy) Nash Equilibrium must exist insuch a game.

Since the development of the Nash equilibrium concept, gametheorists have discovered that it makes misleading predictions (orfails to make a unique prediction) in certain circumstances.Therefore they have proposed many related solution concepts (also called 'refinements'of Nash equilibrium) designed to overcome perceived flaws in theNash concept. One particularly important issue is that some Nashequilibria may be based on threats that are not 'credible'. Therefore, in 1965 Reinhard Selten proposed subgame perfect equilibrium as arefinement that eliminates equilibria which depend on non-credible threats. Other extensionsof the Nash equilibrium concept have addressed what happens if agame is repeated, or what happens if a game is playedin the absence of perfect information. However,subsequent refinements and extensions of the Nash equilibriumconcept share the main insight on which Nash's concept rests: allequilibrium concepts analyze what choices will be made when eachplayer takes into account the decision-making of others.

Informal definition

Informally, a set of strategies is a Nash equilibrium if noplayer can do better by unilaterally changing his or her strategy.To see what this means, imagine that each player is told thestrategies of the others. Suppose then that each player askshimself or herself: "Knowing the strategies of the other players,and treating the strategies of the other players as set in stone,can I benefit by changing my strategy?"

If any player would answer "Yes", then that set of strategies isnot a Nash equilibrium. But if every player prefers not to switch(or is indifferent between switching and not) then the set ofstrategies is a Nash equilibrium. Thus, each strategy in a Nashequilibrium is a best response to all other strategies in thatequilibrium.[6]

The Nash equilibrium may sometimes appear non-rational in athird-person perspective. This is because it may happen that a Nashequilibrium is not Pareto optimal.

The Nash equilibrium may also have non-rational consequences insequential games because players may "threaten" each other withnon-rational moves. For such games the subgame perfect Nashequilibrium may be more meaningful as a tool of analysis.

Formal definition

Let (S, f) be a game with n players, whereSi is the strategy set for player i,S=S1 × S2 ... × Sn is theset of strategy profiles andf=(f1(x), ..., fn(x)) is the payofffunction for x S. Let xi be a strategy profile of playeri and x-i be a strategy profile of allplayers except for player i. When each player i {1, ..., n} chooses strategy xi resulting instrategy profile x = (x1, ..., xn)then player i obtains payoff fi(x). Notethat the payoff depends on the strategy profile chosen, i.e., onthe strategy chosen by player i as well as the strategieschosen by all the other players. A strategy profilex* S is a Nash equilibrium (NE) if no unilateral deviation instrategy by any single player is profitable for that player, thatis

A game can have either a pure-strategy or a mixed Nash Equilibrium, (in the latter a purestrategy is chosen stochastically with a fixed frequency). Nash proved that if we allow mixed strategies, then everygame with a finite number of players in which each player canchoose from finitely many pure strategies has at least one Nashequilibrium.

When the inequality above holds strictly (with instead of ) for all players and all feasible alternative strategies, thenthe equilibrium is classified as a strict Nash equilibrium.If instead, for some player, there is exact equality between and some other strategy in the set , then the equilibrium is classified as a weak Nashequilibrium.

Nash均衡最浅显易懂的例子就是"囚徒困境":Prisoner's dilemma

中文描述如下:一天,一位富翁在家中被杀,财物被盗。警方抓到两个犯罪嫌疑人,斯卡尔菲丝和那库尔斯,并从他们的住处搜出被害人家中丢失的财物。但是,他们矢口否认曾杀过人,辩称是先发现富翁被杀,然后只是顺手牵羊偷了点儿东西。于是警方将两人隔离,分别关在不同的房间进行审讯。由地方检察官分别和每个人单独谈话。检察官说,“由于你们的偷盗罪已有确凿的证据,所以可以判你们一年刑期。但是,我可以和你做个交易。如果你单独坦白杀人的罪行,我只判你三个月的监禁,但你的同伙要被判十年刑。如果你拒不坦白,而被同伙检举,那么你就将被判十年刑,他只判三个月的监禁。但是,如果你们两人都坦白交代,那么,你们都要被判5年刑。”斯卡尔菲丝和那库尔斯该怎么办呢?他们面临着两难的选择——坦白或抵赖。显然最好的策略是双方都抵赖,结果是大家都只被判一年。但是由于两人处于隔离的情况下无法串供。所以,按照亚当·斯密的理论,每一个人都是从利己的目的出发,他们选择坦白交代是最佳策略。因为坦白交代可以期望得到很短的监禁———3个月,但前提是同伙抵赖,显然要比自己抵赖要坐10年牢好。这种策略是损人利己的策略。不仅如此,坦白还有更多的好处。如果对方坦白了而自己抵赖了,那自己就得坐10年牢。太不划算了!因此,在这种情况下还是应该选择坦白交代,即使两人同时坦白,至多也只判5年,总比被判10年好吧。所以,两人合理的选择是坦白,原本对双方都有利的策略(抵赖)和结局(被判1年刑)就不会出现。这样两人都选择坦白的策略以及因此被判5年的结局被称为“纳什均衡”,也叫非合作均衡。因为,每一方在选择策略时都没有“共谋”(串供),他们只是选择对自己最有利的策略,而不考虑社会福利或任何其他对手的利益。也就是说,这种策略组合由所有局中人(也称当事人、参与者)的最佳策略组合构成。没有人会主动改变自己的策略以便使自己获得更大利益。“囚徒的两难选择”有着广泛而深刻的意义。个人理性与集体理性的冲突,各人追求利己行为而导致的最终结局是一个“纳什均衡”,也是对所有人都不利的结局。他们两人都是在坦白与抵赖策略上首先想到自己,这样他们必然要服长的刑期。只有当他们都首先替对方着想时,或者相互合谋(串供)时,才可以得到最短时间的监禁的结果。

见Main article: Prisoner's dilemma

| Cooperate | Defect | |

|---|---|---|

| Cooperate | 3, 3 | 0, 5 |

| Defect | 5, 0 | 1, 1 |

The Prisoner's Dilemma has a similar matrix as depicted for theCoordination Game, but the maximum reward for each player (in thiscase, 5) is only obtained when the players' decisions aredifferent. Each player improves his own situation by switching from"Cooperating" to "Defecting," given knowledge that the otherplayer's best decision is to "Defect." The Prisoner's Dilemma thushas a single Nash Equilibrium: both players choosing to defect.

What has long made this an interesting case to study is the factthat this scenario is globally inferior to "Both Cooperating." Thatis, both players would be better off if they both chose to"Cooperate" instead of both choosing to defect. However, eachplayer could improve his own situation by breaking the mutualcooperation, no matter how the other player possibly (or certainly)changes his decision.

还有信号灯的博弈协调控制:

Coordination game

Main article: Coordination game| Player 2 adopts strategy A | Player 2 adopts strategy B | |

|---|---|---|

| Player 1 adopts strategy A | 4 / 4 | 1 / 3 |

| Player 1 adopts strategy B | 3 / 1 | 2 / 2 |

The coordination game is a classic (symmetric) two player, two strategy game, with an examplepayoff matrix shown to the right. The playersshould thus coordinate, both adopting strategy A, to receive thehighest payoff; i.e., 4. If both players chose strategy B though,there is still a Nash equilibrium. Although each player is awardedless than optimal payoff, neither player has incentive to changestrategy due to a reduction in the immediate payoff (from 2 to1).

A famous example of this type of game was called the Staghunt; in the game two players may choose to hunt a stag or arabbit, the former providing more meat (4 utility units) than thelatter (1 utility unit). The caveat is that the stag must becooperatively hunted, so if one player attempts to hunt the stag,while the other hunts the rabbit, he will fail in hunting (0utility units), whereas if they both hunt it they will split thepayload (2, 2). The game hence exhibits two equilibria at (stag,stag) and (rabbit, rabbit) and hence the players' optimal strategydepend on their expectation on what the other player may do. If onehunter trusts that the other will hunt the stag, he should hunt thestag; however if he suspects that the other will hunt the rabbit,he should hunt the rabbit. This game was used as an analogy forsocial cooperation, since much of the benefit that people gain insociety depends upon people cooperating and implicitly trusting oneanother to act in a manner corresponding with cooperation.

Another example of a coordination game is the setting where twotechnologies are available to two firms with compatible products,and they have to elect a strategy to become the market standard. Ifboth firms agree on the chosen technology, high sales are expectedfor both firms. If the firms do not agree on the standardtechnology, few sales result. Both strategies are Nash equilibriaof the game.

Driving on a road, and having to choose either to drive on theleft or to drive on the right of the road, is also a coordinationgame. For example, with payoffs 100 meaning no crash and 0 meaninga crash, the coordination game can be defined with the followingpayoff matrix:

| Drive on the Left | Drive on the Right | |

|---|---|---|

| Drive on the Left | 100, 100 | 0, 0 |

| Drive on the Right | 0, 0 | 100, 100 |

In this case there are two pure strategy Nash equilibria, whenboth choose to either drive on the left or on the right. If weadmit mixed strategies (where a pure strategy ischosen at random, subject to some fixed probability), then thereare three Nash equilibria for the same case: two we have seen fromthe pure-strategy form, where the probabilities are (0%,100%) forplayer one, (0%, 100%) for player two; and (100%, 0%) for playerone, (100%, 0%) for player two respectively. We add another wherethe probabilities for each player is (50%, 50%).

以及基于网络的Nash均衡:

Network traffic

See also: Braess's ParadoxSample network graph. Values on edges are the travel timeexperienced by a 'car' travelling down that edge. is the number of cars travelling via that edge.An application of Nash equilibria is in determining the expectedflow of traffic in a network. Consider the graph on the right. Ifwe assume that there are "cars" traveling from A to D, what is the expected distribution oftraffic in the network?

This situation can be modeled as a "game" where every travelerhas a choice of 3 strategies, where each strategy is a route from Ato D (either , , or ). The "payoff" of each strategy is the travel time of each route.In the graph on the right, a car travelling via experiences travel time of , where is the number of cars traveling on edge . Thus, payoffs for any given strategy depend on the choices ofthe other players, as is usual. However, the goal in this case isto minimize travel time, not maximize it. Equilibrium will occurwhen the time on all paths is exactly the same. When that happens,no single driver has any incentive to switch routes, since it canonly add to his/her travel time. For the graph on the right, if,for example, 100 cars are travelling from A to D, then equilibriumwill occur when 25 drivers travel via , 50 via , and 25 via . Every driver now has a total travel time of 3.75.

Notice that this distribution is not, actually, sociallyoptimal. If the 100 cars agreed that 50 travel via and the other 50 through , then travel time for any single car would actually be 3.5 whichis less than 3.75. This is also the Nash equilibrium if the pathbetween B and C is removed, which means that adding an additionalpossible route can decrease the efficiency of the system, aphenomenon known as Braess's Paradox.

Competition game

| Player 2 chooses '0' | Player 2 chooses '1' | Player 2 chooses '2' | Player 2 chooses '3' | |

|---|---|---|---|---|

| Player 1 chooses '0' | 0,0 | 2, -2 | 2, -2 | 2, -2 |

| Player 1 chooses '1' | -2, 2 | 1, 1 | 3,-1 | 3, -1 |

| Player 1 chooses '2' | -2, 2 | -1,3 | 2,2 | 4, 0 |

| Player 1 chooses '3' | -2, 2 | -1, 3 | 0, 4 | 3, 3 |

This can be illustrated by a two-player game in which bothplayers simultaneously choose an integer from 0 to 3 and they bothwin the smaller of the two numbers in points. In addition, if oneplayer chooses a larger number than the other, then he/she has togive up two points to the other.

This game has a unique pure-strategy Nash equilibrium: bothplayers choosing 0 (highlighted in light red). Any other choice ofstrategies can be improved if one of the players lowers his numberto one less than the other player's number. In the table to theright, for example, when starting at the green square it is inplayer 1's interest to move to the purple square by choosing asmaller number, and it is in player 2's interest to move to theblue square by choosing a smaller number. If the game is modifiedso that the two players win the named amount if they both choosethe same number, and otherwise win nothing, then there are 4 Nashequilibria (0,0...1,1...2,2...and 3,3).

Nash equilibria in a payoff matrix

There is an easy numerical way to identify Nash equilibria on apayoff matrix. It is especially helpful in two-person games whereplayers have more than two strategies. In this case formal analysismay become too long. This rule does not apply to the case wheremixed (stochastic) strategies are of interest. The rule goes asfollows: if the first payoff number, in the duplet of the cell, isthe maximum of the column of the cell and if the second number isthe maximum of the row of the cell - then the cell represents aNash equilibrium.

| Option A | Option B | Option C | |

|---|---|---|---|

| Option A | 0, 0 | 25, 40 | 5, 10 |

| Option B | 40, 25 | 0, 0 | 5, 15 |

| Option C | 10, 5 | 15, 5 | 10, 10 |

We can apply this rule to a 3×3 matrix:

Using the rule, we can very quickly (much faster than withformal analysis) see that the Nash Equilibria cells are (B,A),(A,B), and (C,C). Indeed, for cell (B,A) 40 is the maximum of thefirst column and 25 is the maximum of the second row. For (A,B) 25is the maximum of the second column and 40 is the maximum of thefirst row. Same for cell (C,C). For other cells, either one or bothof the duplet members are not the maximum of the corresponding rowsand columns.

This said, the actual mechanics of finding equilibrium cells isobvious: find the maximum of a column and check if the secondmember of the pair is the maximum of the row. If these conditionsare met, the cell represents a Nash Equilibrium. Check all columnsthis way to find all NE cells. An N×N matrix may have between 0 andN×N pure strategy Nash equilibria.

Stability

The concept of stability, useful in the analysis of manykinds of equilibria, can also be applied to Nash equilibria.

A Nash equilibrium for a mixed strategy game is stable if asmall change (specifically, an infinitesimal change) inprobabilities for one player leads to a situation where twoconditions hold:

- the player who did not change has no better strategy in the newcircumstance

- the player who did change is now playing with a strictly worsestrategy.

If these cases are both met, then a player with the small changein his mixed-strategy will return immediately to the Nashequilibrium. The equilibrium is said to be stable. If condition onedoes not hold then the equilibrium is unstable. If only conditionone holds then there are likely to be an infinite number of optimalstrategies for the player who changed. John Nash showed that the latter situationcould not arise in a range of well-defined games.

In the "driving game" example above there are both stable andunstable equilibria. The equilibria involving mixed-strategies with100% probabilities are stable. If either player changes hisprobabilities slightly, they will be both at a disadvantage, andhis opponent will have no reason to change his strategy in turn.The (50%,50%) equilibrium is unstable. If either player changes hisprobabilities, then the other player immediately has a betterstrategy at either (0%, 100%) or (100%, 0%).

Stability is crucial in practical applications of Nashequilibria, since the mixed-strategy of each player is notperfectly known, but has to be inferred from statisticaldistribution of his actions in the game. In this case unstableequilibria are very unlikely to arise in practice, since any minutechange in the proportions of each strategy seen will lead to achange in strategy and the breakdown of the equilibrium.

The Nash equilibrium defines stability only in terms ofunilateral deviations. In cooperative games such a concept is notconvincing enough. Strong Nash equilibrium allows fordeviations by every conceivable coalition.[7]Formally, a Strong Nash equilibrium is a Nashequilibrium in which no coalition, taking the actions of itscomplements as given, can cooperatively deviate in a way thatbenefits all of its members.[8]However, the Strong Nash concept is sometimes perceived as too"strong" in that the environment allows for unlimited privatecommunication. In fact, Strong Nash equilibrium has to be Pareto efficient. As a result of theserequirements, Strong Nash is too rare to be useful in many branchesof game theory. However, in games such as elections with many moreplayers than possible outcomes, it can be more common than a stableequilibrium.

A refined Nash equilibrium known as coalition-proof Nashequilibrium (CPNE)[7]occurs when players cannot do better even if they are allowed tocommunicate and make "self-enforcing" agreement to deviate. Everycorrelated strategy supported by iterated strict dominance and on thePareto frontier is a CPNE.[9]Further, it is possible for a game to have a Nash equilibrium thatis resilient against coalitions less than a specified size, k. CPNEis related to the theory of the core.

Finally in the eighties, building with great depth on such ideasMertens-stable equilibriawere introduced as a solution concept. Mertens stable equilibria satisfy bothforward induction and backward induction. In a Gametheory context stable equilibria now usually refer toMertens stable equilibria.

Occurrence

If a game has a unique Nash equilibrium and is played amongplayers under certain conditions, then the NE strategy set will beadopted. Sufficient conditions to guarantee that the Nashequilibrium is played are:

- The players all will do their utmost to maximize their expectedpayoff as described by the game.

- The players are flawless in execution.

- The players have sufficient intelligence to deduce thesolution.

- The players know the planned equilibrium strategy of all of theother players.

- The players believe that a deviation in their own strategy willnot cause deviations by any other players.

- There is common knowledge that all playersmeet these conditions, including this one. So, not only must eachplayer know the other players meet the conditions, but also theymust know that they all know that they meet them, and know thatthey know that they know that they meet them, and so on.

Where the conditions are not met

Examples of game theory problems in which theseconditions are not met:

- The first condition is not met if the game does not correctlydescribe the quantities a player wishes to maximize. In this casethere is no particular reason for that player to adopt anequilibrium strategy. For instance, the prisoner’s dilemma is not adilemma if either player is happy to be jailed indefinitely.

- Intentional or accidental imperfection in execution. Forexample, a computer capable of flawless logical play facing asecond flawless computer will result in equilibrium. Introductionof imperfection will lead to its disruption either through loss tothe player who makes the mistake, or through negation of thecommon knowledge criterionleading to possible victory for the player. (An example would be aplayer suddenly putting the car into reverse in the game of chicken, ensuring a no-loss no-winscenario).

- In many cases, the third condition is not met because, eventhough the equilibrium must exist, it is unknown due to thecomplexity of the game, for instance in Chinese chess.[10]Or, if known, it may not be known to all players, as when playingtic-tac-toe with a small child who desperatelywants to win (meeting the other criteria).

- The criterion of common knowledge may not be met even if allplayers do, in fact, meet all the other criteria. Players wronglydistrusting each other's rationality may adopt counter-strategiesto expected irrational play on their opponents’ behalf. This is amajor consideration in “Chicken” or an armsrace, for example.

Where the conditions are met

Due to the limited conditions in which NE can actually beobserved, they are rarely treated as a guide to day-to-daybehaviour, or observed in practice in human negotiations. However,as a theoretical concept in economics and evolutionary biology, the NE hasexplanatory power. The payoff in economics is utility (or sometimesmoney), and in evolutionary biology gene transmission, both are thefundamental bottom line of survival. Researchers who apply gamestheory in these fields claim that strategies failing to maximizethese for whatever reason will be competed out of the market orenvironment, which are ascribed the ability to test all strategies.This conclusion is drawn from the "stability" theory above. In these situationsthe assumption that the strategy observed is actually a NE hasoften been borne out by research[citationneeded].[verificationneeded]

NE and non-credible threats

Extensive and Normal form illustrations that show the differencebetween SPNE and other NE. The blue equilibrium is not subgameperfect because player two makes a non-credible threat at 2(2) tobe unkind (U).The Nash equilibrium is a superset of the subgame perfect Nashequilibrium. The subgame perfect equilibrium in addition to theNash Equilibrium requires that the strategy also is a Nashequilibrium in every subgame of that game. This eliminates allnon-credible threats, that is,strategies that contain non-rational moves in order to make thecounter-player change his strategy.

The image to the right shows a simple sequential game thatillustrates the issue with subgame imperfect Nash equilibria. Inthis game player one chooses left(L) or right(R), which is followedby player two being called upon to be kind (K) or unkind (U) toplayer one, However, player two only stands to gain from beingunkind if player one goes left. If player one goes right therational player two would de facto be kind to him in that subgame.However, The non-credible threat of being unkind at 2(2) is stillpart of the blue (L, (U,U)) Nash equilibrium. Therefore, ifrational behavior can be expected by both parties the subgameperfect Nash equilibrium may be a more meaningful solution conceptwhen such dynamic inconsistencies arise.

Proof of existence

Proof using the Kakutani fixed point theorem

Nash's original proof (in his thesis) used Brouwer's fixed pointtheorem (e.g., see below for a variant). We give a simpler proofvia the Kakutani fixed point theorem,following Nash's 1950 paper (he credits DavidGale with the observation that such a simplification ispossible).

To prove the existence of a Nash Equilibrium, let be the best response of player i to the strategies of all otherplayers.

Here, , where , is a mixed strategy profile in the set of all mixed strategiesand is the payoff function for player i. Define a set-valued function such that . The existence of a Nash Equilibrium is equivalent to having a fixed point.

Kakutani's fixed point theorem guarantees the existence of afixed point if the following four conditions are satisfied.

- is compact, convex, and nonempty.

- is nonempty.

- is convex.

- is upper hemicontinuous

Condition 1. is satisfied from the fact that is a simplex and thus compact. Convexity follows from players'ability to mix strategies. is nonempty as long as players have strategies.

Condition 2. is satisfied because players maximize expectedpayoffs which is continuous function over a compact set. TheWeierstrass Extreme Value Theorem guarantees thatthere is always a maximum value.

Condition 3. is satisfied as a result of mixed strategies.Suppose , then . i.e. if two strategies maximize payoffs, then a mix between thetwo strategies will yield the same payoff.

Condition 4. is satisfied by way of Berge's maximum theorem. Because is continuous and compact, is upper hemicontinuous.

Therefore, there exists a fixed point in and a Nash Equilibrium.[11]

When Nash made this point to John von Neumann in 1949, von Neumannfamously dismissed it with the words, "That's trivial, you know.That's just a fixed point theorem." (See Nasar,1998, p.94.)

Alternate proof using the Brouwer fixed-pointtheorem

We have a game where is the number of players and is the action set for the players. All of the action sets are finite. Let denote the set of mixed strategies for the players. The finitenessof the s ensures the compactness of .

We can now define the gain functions. For a mixed strategy , we let the gain for player on action be

The gain function represents the benefit a player gets byunilaterally changing his strategy. We now define where

for . We see that

We now use to define as follows. Let

for . It is easy to see that each is a valid mixed strategy in . It is also easy to check that each is a continuous function of , and hence is a continuous function. Now is the cross product of a finite number of compact convex sets,and so we get that is also compact and convex. Therefore we may apply the Brouwerfixed point theorem to . So has a fixed point in , call it .

I claim that is a Nash Equilibrium in . For this purpose, it suffices to show that

This simply states the each player gains no benefit byunilaterally changing his strategy which is exactly the necessarycondition for being a Nash Equilibrium.

Now assume that the gains are not all zero. Therefore, , , and such that . Note then that

So let . Also we shall denote as the gain vector indexed by actions in . Since we clearly have that . Therefore we see that

Since we have that is some positive scaling of the vector . Now I claim that

.To see this, we first note that if then this is true by definition of the gain function. Now assumethat . By our previous statements we have that

and so the left term is zero, giving us that the entireexpression is as needed.

So we finally have that

where the last inequality follows since is a non-zero vector. But this is a clear contradiction, so allthe gains must indeed be zero. Therefore is a Nash Equilibrium for as needed.

Computing Nash equilibria

If a player A has a dominant strategy then there exists a Nash equilibrium in which A plays . In the case of two players A and B, there exists a Nashequilibrium in which A plays and B plays a best response to . If is a strictly dominant strategy, A plays in all Nash equilibria. If both A and B have strictly dominantstrategies, there exists a unique Nash equilibrium in which eachplays his strictly dominant strategy.

In games with mixed strategy Nash equilibria, the probability ofa player choosing any particular strategy can be computed byassigning a variable to each strategy that represents a fixedprobability for choosing that strategy. In order for a player to bewilling to randomize, his expected payoff for each strategy shouldbe the same. In addition, the sum of the probabilities for eachstrategy of a particular player should be 1. This creates a systemof equations from which the probabilities of choosing each strategycan be derived.[6]

Examples

| Player B plays H | Player B plays T | |

|---|---|---|

| Player A plays H | −1, +1 | +1, −1 |

| Player A plays T | +1, −1 | −1, +1 |

In the matching pennies game, player A loses a point to B if Aand B play the same strategy and wins a point from B if they playdifferent strategies. To compute the mixed strategy Nashequilibrium, assign A the probability p of playing H and (1−p) ofplaying T, and assign B the probability q of playing H and (1−q) ofplaying T.

- E[payoff for A playing H] = (−1)q + (+1)(1−q) = 1−2q

- E[payoff for A playing T] = (+1)q + (−1)(1−q) = 2q−1

- E[payoff for A playing H] = E[payoff for A playing T] ⇒ 1−2q =2q−1 ⇒ q = 1/2

- E[payoff for B playing H] = (+1)p + (−1)(1−p) = 2p−1

- E[payoff for B playing T] = (−1)p + (+1)(1−p) = 1−2p

- E[payoff for B playing H] = E[payoff for B playing T] ⇒ 2p−1 =1−2p ⇒ p = 1/2

Thus a mixed strategy Nash equilibrium in this game is for eachplayer to randomly choose H or T with equal probability.

关于Nash的生平:From Wikipedia, the freeencyclopedia

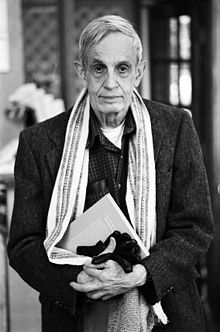

JohnForbes Nash, Jr.

| John Forbes Nash, Jr. | |

|---|---|

| Born | June13, 1928 (age84) Bluefield, West Virginia,U.S. |

| Residence | United States |

| Nationality | American |

| Fields | Mathematics, Economics |

| Institutions | MassachusettsInstitute of Technology Princeton University |

| Alma mater | Princeton University, Carnegie Institute ofTechnology (now part of Carnegie Mellon University) |

| Doctoral advisor | Albert W. Tucker |

| Knownfor | Nash equilibrium Nash embedding theorem Algebraic geometry Partialdifferential equations |

| Notable awards | Nobel MemorialPrize in Economic Sciences (1994) |

| Spouse | Alicia Lopez-Harrison de Lardé (m. 1957–1963;2001–present) |

John Forbes Nash, Jr. (born June 13, 1928) is an Americanmathematician whose works in gametheory, differential geometry, and partialdifferential equations have provided insight into the forcesthat govern chance and events inside complex systems in daily life.His theories are used in market economics,computing, evolutionary biology, artificial intelligence, accounting,politics and military theory. Serving as a Senior ResearchMathematician at Princeton University during the latterpart of his life, he shared the 1994 Nobel MemorialPrize in Economic Sciences with game theorists Reinhard Selten and John Harsanyi.

Nash is the subject of the Hollywood movie A Beautiful Mind. The film,loosely based on the biography of the same name, focuseson Nash's mathematical genius and struggle with paranoidschizophrenia.[1][2]

In his own words, he states,

- I later spent times of the order of five to eight months inhospitals in New Jersey, always on an involuntary basis and alwaysattempting a legal argument for release. And it did happen thatwhen I had been long enough hospitalized that I would finallyrenounce delusional hypotheses and revert to thinking of myself asa human of more conventional circumstances and return tomathematical research. In these interludes of, as it were, enforcedrationality, I did succeed in doing some respectable mathematicalresearch. Thus there came about the research for "Le problème de Cauchy pour les équationsdifférentielles d'un fluide général"; the idea that Prof. Hironakacalled "the Nash blowing-up transformation"; and those of "ArcStructure of Singularities" and "Analyticity of Solutions ofImplicit Function Problems with Analytic Data".

- But after my return to the dream-like delusional hypotheses inthe later 60's I became a person of delusionally influencedthinking but of relatively moderate behavior and thus tended toavoid hospitalization and the direct attention ofpsychiatrists.

- Thus further time passed. Then gradually I began tointellectually reject some of the delusionally influenced lines ofthinking which had been characteristic of my orientation. Thisbegan, most recognizably, with the rejection ofpolitically-oriented thinking as essentially a hopeless waste ofintellectual effort.[3]

Early lifeand career

Nash was born on June 13, 1928, in Bluefield, West Virginia. Hisfather, after whom he is named, was an electrical engineer for theAppalachian Electric PowerCompany. His mother, born Margaret Virginia Martin and known asVirginia, had been a schoolteacher before she married. Both parentspursued opportunities to supplement their son's education,providing him with encyclopedias and even allowing him to takeadvanced mathematics courses at a local college while still in highschool. After attending Carnegie Institute ofTechnology (now Carnegie Mellon University) andgraduating in 1948 with bachelor's and master's degrees inmathematics, he accepted a scholarship to Princeton Universitywhere he pursued his graduate studies in Mathematics.[3]

Post-graduate career

Nash's advisor and former Carnegie Tech professor R.J. Duffinwrote a letter of recommendation consisting of a single sentence:"This man is a genius."[4]Nash was accepted by Harvard University, but the chairman ofthe mathematics department of Princeton, Solomon Lefschetz, offered him the John S. Kennedyfellowship, which was enough to convince Nash that Harvard valuedhim less.[5]Thus he went to Princeton where he worked on his equilibrium theory. He earned a doctorate in 1950 with a 28-pagedissertation onnon-cooperative games.[6]The thesis, which was written under the supervision of Albert W. Tucker, contained the definitionand properties of what would later be called the "Nash equilibrium". These studies led to fourarticles:

Nash did ground-breaking work in the area of real algebraic geometry:

His work in mathematics includes the Nash embedding theorem, which showsthat any abstract Riemannian manifold can be isometrically realized as a submanifold of Euclidean space. He also made significantcontributions to the theory of nonlinear parabolic partialdifferential equations and to singularity theory.

In the book A Beautiful Mind, author Sylvia Nasarexplains that Nash was working on proving a theorem involving ellipticpartial differential equations when, in 1956, he suffered a severedisappointment when he learned of an Italian mathematician,Ennio de Giorgi, who had published a proof acouple of months[vague]before Nash achieved his proof. Each took different routes to getto their solutions. The two mathematicians met each other at theCourant Institute of Mathematical Sciences of New York University during the summer of1956. It has been speculated that if only one of them had solvedthe problem, he would have been given the Fields Medal for the proof.[3]

In 2011, the National Security Agencydeclassified letters written by Nash in 1950s, in which he hadproposed a new encryption-decryption machine.[8]The letters show that Nash had anticipated many concepts of moderncryptography, which are based on computationalhardness.[9]

Personallife

In 1951, Nash went to the MassachusettsInstitute of Technology as a C. L. E. Moore Instructor in themathematics faculty. There, he met Alicia Lopez-Harrison de Lardé(born January 1, 1933), a physicsstudent from El Salvador, whom he married in February1957 at a Catholic ceremony, although Nash was an atheist.[10]She admitted Nash to a mental hospital in 1959for schizophrenia; their son, John Charles MartinNash, was born soon afterward, but remained nameless for a yearbecause his mother felt that her husband should have a say in thename.

Nash and de Lardé divorced in 1963, though after his finalhospital discharge in 1970 Nash lived in de Lardé's house. Theywere remarried in 2001.

Nash has been a longtime resident of West Windsor Township,New Jersey.[11]

Mentalillness

Schizophrenia

Nash began to show signs of extreme paranoia and his wife later described his behavioras erratic, as he began speaking of characters like Charles Hermanand William Parcher who were putting him in danger. Nash seemed tobelieve that all men who wore red ties were part of a communistconspiracy against him. Nash mailed letters to embassies inWashington, D.C., declaring that they wereestablishing a government.[12][13]

He was admitted to the McLean Hospital, April–May 1959, where he wasdiagnosed with paranoidschizophrenia. The clinical picture is dominated by relativelystable, often paranoid, fixed beliefs that are either false,over-imaginative or unrealistic, usually accompanied by experiencesof seemingly real perception of something not actuallypresent— particularly auditory and perceptionaldisturbances, a lack of motivation for life, and mild clinicaldepression.[14]Upon his release, Nash resigned from MIT, withdrew his n,and went to Europe, unsuccessfully seeking political asylum inFrance and East Germany. He tried to renounce his U.S.citizenship. After a problematic stay in Parisand Geneva, he was arrested by the French police anddeported back to the United States at the request of the U.S.government.

In 1961, Nash was committed to the New Jersey State Hospital at Trenton. Over thenext nine years, he spent periods in psychiatric hospitals, where, aside fromreceiving antipsychotic medications, he was administered insulin shock therapy.[14][15][16]

Although he sometimes took prescribed medication, Nash laterwrote that he only ever did so under pressure. After 1970, he wasnever committed to the hospital again and he refused anymedication. According to Nash, the film A Beautiful Mind inaccuratelyimplied that he was taking the new atypical antipsychotics during thisperiod. He attributed the depiction to the screenwriter (whosemother, he notes, was a psychiatrist), who was worried aboutencouraging people with the disorder to stop taking theirmedication.[17]Others, however, have questioned whether the fabrication obscured akey question as to whether recovery from problems like Nash's canactually be hindered by such drugs,[18]and Nash has said they are overrated and that the adverse effectsare not given enough consideration once someone is deemed mentally ill.[19][20][21]According to Sylvia Nasar, author of the book A Beautiful Mind, on which themovie was based, Nash recovered gradually with the passage of time.Encouraged by his then former wife, de Lardé, Nash worked in acommunitarian setting wherehis eccentricities were accepted. De Lardé said of Nash, "it's justa question of living a quiet life".[13]

Nash in November 2006 at a game theory conference in Cologne.Nash dates the start of what he terms "mental disturbances" tothe early months of 1959 when his wife was pregnant. He hasdescribed a process of change "from scientific rationality ofthinking into the delusional thinkingcharacteristic of persons who are psychiatrically diagnosed as'schizophrenic' or 'paranoid schizophrenic'"[22]including seeing himself as a messenger or having a specialfunction in some way, and with supporters and opponents and hiddenschemers, and a feeling of being persecuted, and looking for signsrepresenting divine revelation.[23]Nash has suggested his delusional thinking was related to hisunhappiness and his striving to feel important and be recognized,and to his characteristic way of thinking such that "I wouldn'thave had good scientific ideas if I had thought more normally." Hehas said, "If I felt completely pressureless I don't think I wouldhave gone in this pattern".[24]He does not see a categorical distinction between terms such asschizophrenia and bipolar disorder.[25]Nash reports that he did not hear voices until around 1964, laterengaging in a process of rejecting them.[26]He reports that he was always taken to hospitals against his will,and only temporarily renounced his "dream-like delusionalhypotheses" after being in a hospital long enough to decide tosuperficially conform - to behave normally or to experience"enforced rationality". Only gradually on his own did he"intellectually reject" some of the "delusionally influenced" and"politically-oriented" thinking as a waste of effort. However, by1995, although he was "thinking rationally again in the style thatis characteristic of scientists," he says he also felt morelimited.[22][27]

Recognition and latercareer

At Princeton, campus legend Nash became "The Phantom of FineHall" (Princeton's mathematics center), a shadowy figure who wouldscribble arcane equations on blackboards in the middle of thenight. The legend appears in a work of fiction based on Princetonlife, The Mind-Body Problem, by Rebecca Goldstein.

In 1978, Nash was awarded the John von Neumann Theory Prizefor his discovery of non-cooperative equilibria, now calledNash equilibria. He won the Leroy P. Steele Prize in 1999.

In 1994, he received the Nobel MemorialPrize in Economic Sciences (along with John Harsanyi and Reinhard Selten) as a result of his gametheory work as a Princeton graduate student. In the late 1980s,Nash had begun to use email to gradually link with workingmathematicians who realized that he was the John Nash andthat his new work had value. They formed part of the nucleus of agroup that contacted the Bank of Sweden's Nobelaward committee and were able to vouch for Nash's mental healthability to receive the award in recognition of his earlywork.[citationneeded]

As of 2011 Nash's recent work involves ventures in advanced gametheory, including partial agency, which show that, as in his earlycareer, he prefers to select his own path and problems. Between1945 and 1996, he published 23 scientific studies.

Nash has suggested hypotheses on mental illness. He hascompared not thinking in an acceptable manner, or being "insane"and not fitting into a usual social function, to being "on strike" from an economic point of view. He hasadvanced evolutionary psychology views aboutthe value of human diversity and the potential benefits ofapparently nonstandard behaviors or roles.[28]

Nash has developed work on the role of money in society. Withinthe framing theorem that people can be so controlled and motivatedby money that they may not be able to reason rationally about it,he has criticized interest groups that promote quasi-doctrinesbased on Keynesian economics that permitmanipulative short-term inflation and debt tacticsthat ultimately undermine currencies. He has suggested a global"industrial consumption price index" system that would support thedevelopment of more "ideal money" that people could trust ratherthan more unstable "bad money". He notes that some of his thinkingparallels economist and political philosopher Friedrich Hayek's thinking regarding moneyand a nontypical viewpoint of the function of theauthorities.[29][30]

Nash received an honorary degree, Doctor of Science andTechnology, from Carnegie Mellon University in1999, an honorary degree in economics from the University of NaplesFederico II on March 19, 2003,[31]an honorary doctorate in economics from the University ofAntwerp in April 2007, and was keynote speaker at a conferenceon Game Theory. He has also been a prolific guest speaker at anumber of world-class events, such as the Warwick Economics Summit in 2005held at the University of Warwick.

Nash has an Erdős number of 3.[32][33][34]

爱华网

爱华网